In this article we’ll take a look at how an A/B testing survey can give businesses the confidence to move forward with an idea, product, website, or other business asset.

We’ll look at how to conduct A/B testing, common mistakes to avoid, and when A/B testing would work best for your research objectives.


What is A/B testing in survey research?

A/B testing, also known as split testing, is an efficient and reliable advanced market research methodology that screens different versions of an idea - be it communication styles, a product concept, or an idea for a new service. A/B testing is versatile and quick. When it is run on an adequate sample with significance testing, its results are clear enough for businesses to act on with confidence.

The A/B testing process is relatively straightforward. Two or more different versions of something are tested - various versions of a landing page for example, or varying package designs. In an example with just two versions, Version A is often the control - say, the existing landing page on a website, or the existing package design. Version B would be an amended version, with a different design or new features that businesses can use to gauge consumers’ reactions.

Comparable groups of respondents (similar in size and profile) are each shown one version of the asset being tested. The differences in responses between the groups reveal preference or usage differences, indicating which version is most likely to have the desired effect in a real-world scenario (e.g. the highest click-through rate, the highest likelihood of purchase, or the most engaging user experience).

Because each group of respondents sees only one version of an asset, they can give their full attention to that one idea without comparing and contrasting different variations. Showing several variations to the same respondent is a different design, often called sequential monadic or comparative testing. Evaluating one asset in isolation gives a more authentic read on it, free from the order and contrast effects that side-by-side comparisons can introduce.

How to conduct A/B testing on quantilope’s platform

As with all other automated advanced research methods on quantilope’s Consumer Intelligence Platform, it’s very straightforward to set up an A/B test. Platform users can choose to start with an automated survey template, or build their A/B test from scratch with drag & drop modules from a pre-programmed question library. In both cases, all questions are 100% customizable to each research team’s needs.

To start with a template, open the quantilope platform and click ‘start new project’. From there, you can choose a template that relates to your business need for an A/B test (for example, a concept test). With this approach, all survey questions relevant to your business need (i.e. concept testing) are automatically populated into your survey so you don't risk forgetting relevant questions and metrics.

If you’d prefer to start from scratch, the first step is the same: click ‘start a new project’. But rather than starting with a fully populated survey (as you do with a template), you select each relevant question (including your A/B test) from the pre-programmed library drop-down.

Below is a brief demo showing how easy it is to drag and drop an A/B test into your survey from quantilope's pre-programmed library:

You can choose to test as many different assets as you’d like, and quantilope’s machine-learning algorithms will automatically ensure that your total sample is evenly split among all test groups, with each respondent seeing only one asset.
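The article doesn't detail how the platform splits the sample. As an illustration only, one common way to keep test groups even during live fielding is to send each new respondent to whichever cell currently has the fewest respondents, breaking ties at random. The minimal Python sketch below assumes that approach. The `CellAssigner` class and its methods are hypothetical names, not part of quantilope's platform or API.

```python
import random
from collections import Counter


class CellAssigner:
    """Hypothetical helper: assigns each incoming respondent to exactly one test cell.

    Each respondent goes to the cell with the fewest assignments so far, with
    ties broken at random, so cell sizes never differ by more than one.
    """

    def __init__(self, cells, seed=None):
        if not cells:
            raise ValueError("at least one cell is required")
        self.counts = {cell: 0 for cell in cells}
        self.rng = random.Random(seed)  # seeded only to make examples reproducible

    def assign(self):
        lowest = min(self.counts.values())
        # Every cell tied for the lowest count is equally eligible.
        candidates = [cell for cell, n in self.counts.items() if n == lowest]
        cell = self.rng.choice(candidates)
        self.counts[cell] += 1
        return cell


if __name__ == "__main__":
    assigner = CellAssigner(["Version A", "Version B", "Version C"], seed=42)
    assignments = [assigner.assign() for _ in range(10)]
    # With 10 respondents and 3 cells, each cell receives 3 or 4 respondents.
    print(Counter(assignments))
```

A real platform would also need to handle respondents who drop out before completing, for example by balancing on completed interviews rather than starts. This sketch ignores that.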

Monitor your response rate in real time as respondents complete your survey, and begin building analysis charts that automatically update as new data becomes available throughout fielding. You can even add analysis charts to a final dashboard before fieldwork is complete, and they will keep updating in the dashboard as well.

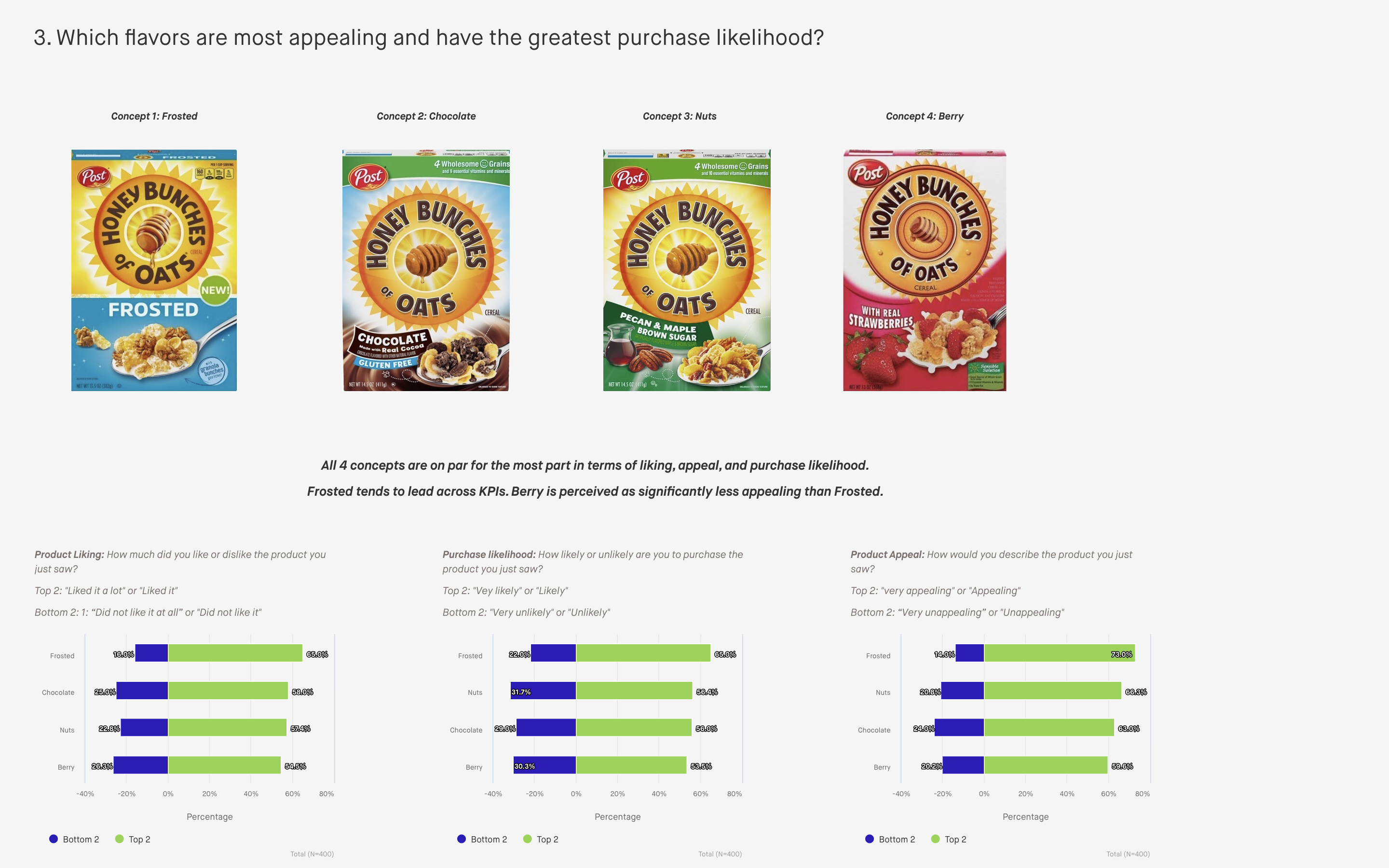

For an example of an A/B test used in a concept testing study, check out quantilope's syndicated cereal study, with a sneak peek of the dashboard below:

Common mistakes to avoid when conducting an A/B test

While A/B testing is a valuable research methodology, it needs to be set up and leveraged correctly for reliable results.

Below are a few common mistakes to be aware of when creating your A/B study:

-

Treating it as a one-off exercise

A/B testing should be an iterative, ongoing part of CRO (conversion rate optimization) that fits into a wider, carefully planned strategy, rather than something launched on a whim for one specific need. Even if your initial A/B test results are promising, it’s best practice to keep testing your top-performing concept or group of features to optimize the overall offer. What worked in your first test might not perform as well in subsequent testing when compared against other options.

In addition to identifying the winning idea from the group you’ve tested, the results also point to areas for optimization. Is the font too light or too small? Do the images clash with the brand image? These are all refinements to consider when moving into the next round of testing.

-

Insufficient preparation

While an A/B test itself can be relatively quick, especially on an automated research platform like quantilope’s, the preparation that goes into it shouldn’t be rushed. Planning and prioritizing are key with A/B testing, so that the elements you finally test have been carefully thought through and produce truly actionable results.

Preparation means gathering all relevant information about the idea before launching your official test in a respondent survey. For a website page A/B test, this might mean reviewing visitor activity patterns and heat maps to see what customers do on your page, or looking at key metrics like bounce rate and length of site visit. Analyzing how your target audience has engaged with your existing page will help you form a hypothesis about which elements of the page to change. Only once you know which changes you want to make should you begin crafting the actual A/B test, using the information you’ve already gathered.

-

Focusing on the wrong areas

This relates closely to your hypothesis and initial preparation. You need to be sure that the effort you put into your study will lead to actionable, strategic, and beneficial outcomes for your business. One way to make sure you’re focusing on the right areas is to run preliminary internal research that points to potential problems - maybe a UX study showing that respondents overlook a call-to-action (CTA) on your web page, or a pricing study that captures general price perceptions before you make concrete pricing changes. Any study that homes in on your current customer experience and conversion rates before you narrow down to an official A/B test will increase your chances of a positive final outcome.

-

Testing too many elements at once

If you’re trying to improve a website page, for example, don’t change the color scheme, the location and shape of the call-to-action button, the page wording, and the layout all at once. If the new version performs better (or worse), it will be impossible to isolate which change caused the difference. Instead, choose one or two factors (like color scheme and page layout) and, once those have been tested, build on the findings in further rounds of research that explore the other factors (like the CTA button and page wording).

-

Ignoring statistical significance

Ignoring or failing to run statistical significance testing leaves room for researcher bias or personal opinion when analyzing your A/B test results. It’s easy to let your own preferences for an idea sneak their way into the research, but remember that it’s your audience who will decide what works and what doesn’t. Significance testing checks whether the difference between groups is larger than you would expect from random sampling variation alone, so you act only on differences that are backed by the data.
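For a yes/no outcome such as the share of respondents who say they would buy, one standard significance test is the two-proportion z-test. The Python sketch below illustrates that test under this assumption and is not quantilope's method. The function name and the example counts (120 of 400 vs. 150 of 400) are made up. Rating-scale or other continuous metrics would call for a different test, such as a t-test.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Two-sided z-test for the difference between two independent proportions.

    Returns (difference, z, p_value), where difference = share_b - share_a.
    The standard error uses the pooled proportion, which is appropriate
    under the null hypothesis that both versions perform equally.
    """
    share_a = successes_a / n_a
    share_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation in responses; the test is undefined")
    z = (share_b - share_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return share_b - share_a, z, p_value


if __name__ == "__main__":
    # Illustrative numbers: 120 of 400 respondents say "would buy" for
    # Version A, 150 of 400 for Version B.
    diff, z, p = two_proportion_z_test(120, 400, 150, 400)
    print(f"difference={diff:.3f}, z={z:.2f}, p={p:.3f}")
```

With these made-up counts, the 7.5-point gap gives a p-value of roughly 0.025. That is below the conventional 0.05 threshold, so the difference would usually be treated as statistically significant.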

-

Using the wrong sample size

Speaking of statistical testing, fielding your A/B test with too small a sample will undermine the credibility of your findings. With few respondents per group, even real differences between versions may fail to reach significance, and the percentages you do see are less precise. Consult your research team or your platform’s recommendation for an appropriate number of respondents per test group.
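To show why sample size matters, the Python sketch below estimates how many respondents each group needs to detect a given difference in a yes/no metric. It is a planning aid under stated assumptions, not quantilope's recommendation. It uses the common normal-approximation formula for comparing two proportions, with a 5% significance level and 80% power as conventional defaults. The function name is hypothetical.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(p_a, p_b, alpha=0.05, power=0.80):
    """Approximate respondents needed per group to detect p_a vs. p_b.

    Normal-approximation formula for a two-sided test of two proportions:
    n = (z_{1-alpha/2} + z_{power})^2 * (p_a(1-p_a) + p_b(1-p_b)) / (p_a - p_b)^2
    """
    if p_a == p_b:
        raise ValueError("expected proportions must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_power = NormalDist().inv_cdf(power)          # about 0.84 for 80% power
    variance = p_a * (1 - p_a) + p_b * (1 - p_b)
    n = (z_alpha + z_power) ** 2 * variance / (p_a - p_b) ** 2
    return ceil(n)  # round up: you can't survey a fraction of a respondent


if __name__ == "__main__":
    # Expecting about 30% purchase intent for Version A and hoping to detect 37.5% for B.
    print(sample_size_per_group(0.30, 0.375))
```

At these settings, detecting a 30% vs. 37.5% difference needs roughly 620 respondents per group. Because the difference is squared in the denominator, halving the difference you want to detect roughly quadruples the required sample.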


When should you conduct A/B testing in surveys?

A/B testing is particularly popular in digital marketing, as brands can quickly test different variations of a home page, email, or social media post. Digital communication is so competitive today that it pays to optimize each piece of messaging so it stands out. It’s also common in concept testing studies to narrow down the final features of a new product idea.

Below are a few specific reasons to leverage A/B testing in your market research approach.

-

To resolve pain points

A/B testing can help pinpoint specific sources of customer frustration - whether it’s website visitors’ ease of navigation, customers’ product usability, or any other scenario that could be causing a customer pain point. Determining - and then resolving - customer pain points can drastically improve conversion rates or product loyalty.

Run A/B tests to find out what those pain points are, and then measure how well your proposed fixes work by testing new versions of a webpage or different iterations of a product prototype.

-

To improve ROI

If you already have loyal customers, one way to improve your ROI is to build their new and changing preferences into existing offers or to craft new product offers so they’ll increase their spend with you. An A/B testing tool allows you to present different versions of these product improvements so that you can explore which elements encourage greater engagement and buy-in.

-

To reduce website bounce rate

High website bounce rates occur when visitor expectations aren’t met. This might be due to a lack of information on the site, an inability to find what visitors are looking for, or confusion around the site’s layout. A/B testing lets you compare different configurations of website elements so that you can identify what causes visitors to drop off - and what encourages them to stay. Acting on those findings can improve the user experience, keep visitors on the site longer, and convert more website visits into sales.

-

To make low-risk changes

When you’re unsure whether an entire overhaul is needed, it often pays to make a few small changes at a time before committing to a drastic one. It might be changing the layout of website images or tweaking the wording in your marketing campaigns; it might also be adding an entirely new feature or offer. Whatever the change, an A/B testing tool measures its impact so that you can move forward with confidence and low risk.

-

To determine which product should be launched into the market

On the other hand, sometimes a complete, in-depth overhaul is needed. If you want to totally revamp or launch a new website or product, an A/B test is one of the best research methodologies for confirming which final offer best fits your target audience. Brands can narrow in on specific features, phrasing, packaging choices, and more until they refine the asset or product to a point that’s ready for launch.

To find out more about quantilope and its automated A/B testing, get in touch below!